For decades, artificial intelligence (AI) has served as the backbone of automation in banking, financial services, and insurance (BFSI). Predictive algorithms have enabled risk modeling, fraud detection, and customer analytics at unprecedented scales. Yet despite these advancements, a crucial question remains largely unanswered: why does AI make the decisions it does?

Traditional AI systems, while adept at pattern recognition, still function as black boxes—capable of forecasting outcomes but rarely explaining them. In highly regulated sectors like BFSI, this lack of explainability poses a fundamental challenge. Institutions must justify every automated decision, ensure compliance with evolving regulations, and maintain public trust. The future, therefore, demands a new class of intelligence—AI that doesn’t just predict but reasons.

The Shift from Automation to Accountability

Today’s financial ecosystem operates at the crossroads of automation and accountability. Banks process millions of data points every second, insurers verify complex claims in real time, and fintech platforms make split-second lending decisions. Yet all these operations require one critical element—transparency.

This is where Reasoning-as-a-Service (RaaS) emerges as a transformative approach. Rather than replacing existing models, RaaS augments them with a layer of cognitive reasoning, enabling systems to explain, justify, and verify their outputs in human-understandable terms.

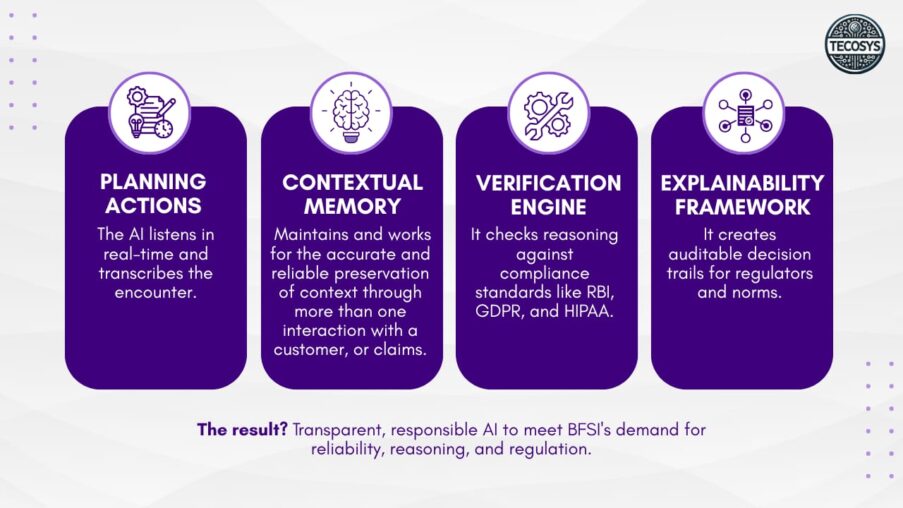

Organizations like Tecosys are pioneering this movement by building cognitive frameworks that make AI explainable, auditable, and compliant for BFSI enterprises. Their RaaS approach, powered by Nutaan AI, demonstrates how reasoning can coexist with automation to enhance decision trust and regulatory transparency.

Understanding Reasoning-as-a-Service (RaaS)

At its core, RaaS is a hosted reasoning layer that integrates with existing AI models, APIs, or enterprise data systems. It interprets complex queries, breaks them down into logical steps, applies contextual understanding, and provides verifiable reasoning trails for every output.

In practice, this could mean:

- A credit scoring algorithm that not only predicts default risk but explains why a particular borrower may be high-risk.
- A claims processing system that provides audit-ready reasoning for every approval or rejection.
- A regulatory engine that checks AI decisions against requirements such as RBI guidelines, GDPR, and HIPAA.

Such capabilities mark a shift toward explainable AI—a model of intelligence that emphasizes clarity, accountability, and traceability.
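To make the idea of a reasoning trail concrete, here is a minimal Python sketch of a layer that wraps a credit model's risk score with named, human-readable checks. It is an illustration only, not the Tecosys or Nutaan AI implementation: the names `ReasoningStep`, `Decision`, and `explain_credit_decision`, the three rules, and every threshold are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ReasoningStep:
    rule: str       # name of the check that was applied (hypothetical rule names)
    observed: str   # what the input data showed
    passed: bool    # True if the check counted in the applicant's favour


@dataclass
class Decision:
    outcome: str
    trail: list[ReasoningStep] = field(default_factory=list)


def explain_credit_decision(model_risk: float, debt_to_income: float,
                            missed_payments: int) -> Decision:
    """Attach a reasoning trail to a model's risk score.

    All thresholds below are illustrative assumptions, not regulatory values.
    """
    trail = [
        ReasoningStep("model_risk_below_0.30",
                      f"model risk = {model_risk:.2f}", model_risk < 0.30),
        ReasoningStep("debt_to_income_below_0.40",
                      f"DTI = {debt_to_income:.2f}", debt_to_income < 0.40),
        ReasoningStep("no_missed_payments_12m",
                      f"missed payments = {missed_payments}", missed_payments == 0),
    ]
    # Approve only when every check passes; otherwise hand over to a human.
    failed = [step for step in trail if not step.passed]
    outcome = "approve" if not failed else "refer_to_underwriter"
    return Decision(outcome, trail)


decision = explain_credit_decision(model_risk=0.42, debt_to_income=0.35,
                                   missed_payments=1)
print(decision.outcome)
for step in decision.trail:
    print("PASS" if step.passed else "FAIL", step.rule, "-", step.observed)
```

In this run the applicant is referred to an underwriter because two of the three checks fail, and the trail records which ones. The point of the design is that the explanation is produced alongside the decision rather than reconstructed afterwards.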

Applications Across the BFSI Landscape

Reasoning-based AI introduces new dimensions of reliability and transparency across several BFSI domains:

- Regulatory Compliance: Financial institutions face increasing scrutiny to demonstrate how automated systems reach their conclusions. RaaS provides structured reasoning trails for each decision, simplifying audits and ensuring compliance without compromising efficiency.
- Fraud Detection: Traditional models flag anomalies; reasoning-based models go further by contextualizing them. They distinguish between truly suspicious patterns and legitimate exceptions, reducing false positives and improving investigative accuracy.
- Risk Evaluation: By embedding reasoning capabilities into risk models, institutions can factor in contextual and behavioral insights alongside historical data, leading to more holistic decision-making.
- Claims Management: In insurance, reasoning engines can cross-check documentation, authenticate facts, and generate justification reports for every claim outcome, strengthening transparency and customer confidence.
- Customer Experience: Cognitive systems with contextual memory can engage with customers dynamically, recalling previous interactions, understanding intent, and proposing reasoned resolutions, ushering in a new era of intelligent financial dialogue.
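The fraud detection case above can be sketched in a few lines of Python. The example assumes an upstream model has already produced an anomaly score, and shows how a reasoning step might separate a legitimate exception from a case that needs investigation. The `Transaction` fields, the `contextualize_flag` function, the travel-notice rule, and the 0.8 threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    country: str
    home_country: str
    # Countries the customer has told the bank they will visit (assumed field).
    travel_notice_countries: tuple[str, ...] = ()


def contextualize_flag(tx: Transaction, anomaly_score: float,
                       threshold: float = 0.8) -> dict:
    """Turn a raw anomaly score into an action plus the reasons behind it."""
    if anomaly_score < threshold:
        return {"action": "allow",
                "reasons": [f"anomaly score {anomaly_score:.2f} is below {threshold:.2f}"]}

    reasons = [f"anomaly score {anomaly_score:.2f} is at or above {threshold:.2f}"]
    abroad = tx.country != tx.home_country
    if abroad and tx.country in tx.travel_notice_countries:
        # Known context explains the anomaly: treat it as a legitimate exception.
        reasons.append(f"customer filed a travel notice for {tx.country}")
        return {"action": "allow_with_note", "reasons": reasons}

    reasons.append("no known context explains the anomaly")
    return {"action": "escalate", "reasons": reasons}


holiday = Transaction(amount=950.0, country="FR", home_country="IN",
                      travel_notice_countries=("FR",))
unexplained = Transaction(amount=950.0, country="FR", home_country="IN")

print(contextualize_flag(holiday, anomaly_score=0.91)["action"])
print(contextualize_flag(unexplained, anomaly_score=0.91)["action"])
```

Both transactions receive the same anomaly score, but only the one without supporting context is escalated, which is how a reasoning layer could reduce false positives while keeping a written justification for each outcome.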

Balancing Intelligence with Responsibility

As BFSI organizations transition toward cognitive intelligence, they also grapple with the economics of AI adoption. Large language models (LLMs) and complex neural systems often consume vast computational resources, raising costs and privacy concerns. Reasoning-based systems address these issues through:

- Token Optimization: Reducing redundant reasoning steps and computational overhead.
- On-Premise Reasoning: Allowing sensitive data to remain within secure enterprise environments.
- Contextual Search: Transforming static data silos into searchable, reasoning-enabled knowledge networks.

Together, these factors make reasoning intelligence not only smarter but also more sustainable to run.
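As one possible reading of token optimization, the sketch below removes repeated steps from a reasoning plan before it is sent to a model. This is an assumption about how redundancy might be reduced, not a description of any vendor's method; `dedupe_steps` and `estimate_tokens` are hypothetical helpers, and counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```python
def dedupe_steps(steps: list[str]) -> list[str]:
    """Drop repeated steps, ignoring case and extra whitespace, keeping first-seen order."""
    normalized = (" ".join(step.lower().split()) for step in steps)
    # dict.fromkeys keeps the first occurrence of each key in insertion order.
    return list(dict.fromkeys(normalized))


def estimate_tokens(steps: list[str]) -> int:
    """Very rough token estimate: one token per whitespace-separated word."""
    return sum(len(step.split()) for step in steps)


plan = [
    "Verify KYC status",
    "Check sanctions list",
    "verify  KYC status",      # duplicate with different case and spacing
    "Score credit risk",
    "Check sanctions list",    # exact duplicate
]

deduped = dedupe_steps(plan)
print(deduped)
print(estimate_tokens(plan), "->", estimate_tokens(deduped))
```

Here five planned steps collapse to three, and the rough token estimate drops from 15 to 9. A production system would more likely need semantic rather than textual matching to detect redundant steps.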

The Cognitive Future of BFSI

The next frontier in financial intelligence will not be defined by how fast AI can predict—but by how well it can reason. The future belongs to systems that can justify every decision, align with every compliance standard, and adapt their reasoning to complex human contexts.

This evolution from prediction to reasoning represents more than a technological shift; it’s an ethical and operational transformation. It bridges the gap between automation and understanding, ensuring that intelligence in finance remains both explainable and trustworthy.

In this cognitive era, BFSI institutions aren’t just building faster machines—they’re building systems that think, verify, and reason responsibly.

Ready to Explore the Future of Cognitive BFSI Intelligence?

Discover how reasoning-driven AI frameworks like those from Tecosys and Nutaan AI are shaping the next generation of BFSI intelligence.

If you’d like to explore or discuss how reasoning-based systems can transform compliance, automation, and decision transparency in your enterprise, book a quick conversation on Calendly.